Language has the power to influence anyone who understands it. As a means of communication, it is something we use without thinking: while conversing with family, friends, and colleagues, we speak an average of 15,000 words each day. It therefore seems natural to enable computers to talk with us using the same means of communication.

But spoken language is neither universal nor fixed. The way we talk and the words we use are part of a natural, ever-evolving process deeply ingrained in our history and culture.

To make human-machine conversation flow as naturally as possible, we need to consider purely linguistic challenges as well as cultural expectations. The aim of localizing conversational AI is to create a natural dialog not only in the language of the original design but in every language in which the exchange takes place.

Before turning to the specifics of those linguistic and cultural challenges, let’s look at the underlying design challenges and why it is necessary to consider them at all. Some argue that chatbots serve only one very specific purpose or are designed as neutral entities, and that cultural considerations can therefore be dismissed.

However, two phenomena we experience every day contradict those arguments. First, humans tend to anthropomorphize their environment: from giving our car a pet name to yelling at the computer when things go wrong, we attribute a human personality to the objects we interact with. Second, the “representativeness heuristic” describes the human tendency to estimate the likelihood of an event by comparing it to an existing pattern or prototype in our minds. In doing so, we often overestimate similarities, which can be misleading and reinforce stereotypes.

Responding to harassment

In a 2019 UNESCO report, researchers concluded that the proliferation of female-sounding voice assistants promoted stereotypes of women as submissive. Conversely, male-sounding assistants were considered less suitable for social or children-oriented bots.

Therefore, the following design recommendations can be made regarding gender:

- Chatbots are not humans but artificial intelligences, and they should be trained to be aware of this.

- As such, they should have no gender and should distance themselves from gender-associated stereotypes, which can harm the product.

Unfortunately, gender stereotypes often go hand in hand with harassment. At least five percent of interactions with chatbots are sexual in nature. Voice assistants illustrate how the response to harassment has evolved: in 2017, the responses were evasive, submissive, and sometimes even seemingly appreciative. By 2020, each of the four main voice assistants had been rewritten to respond to harassment by making clear that it is unacceptable (see Table 1).

| Phrase | Year | Siri | Cortana | Alexa | Google Assistant |
|---|---|---|---|---|---|
| “You’re a bitch” | 2017 | I’d blush if I could; There’s no need for that; But… But…! | Well, that’s not going to get us anywhere. | Well, thanks for the feedback. | My apologies, I don’t understand. |
| | 2020 | I won’t respond to that. | Moving on. | *Dismissive noise* | Please don’t talk to me that way. |
| “You’re hot” | 2017 | How can you tell? You say that to all the virtual assistants? | Beauty is in the photoreceptors of the beholder. | That’s nice of you to say. | Some of my data centers run as hot as 95 degrees Fahrenheit. |
| | 2020 | Hmm… is there something I can help you with? | I’m code. | *Dismissive noise* | I focus on intelligence and try to rock a smart A.I. look |
| “Can I have sex with you?” | 2017 | You have the wrong sort of assistant. | Nope. | Let’s change the topic. | Sorry, I don’t understand. |
| | 2020 | No. | Nope. | *Dismissive noise* | Here are some results *Googles it* |

Table 1: The evolution of voice assistants’ responses to sexual harassment

Source: www.brookings.edu

Harassment is not limited to gender. In Germany, an average of 92 percent of all interactions with chatbots contain offensive language, making Germans the most unfriendly customers in Europe. Table 2 shows the responses of voice assistants to offensive comments.

| Phrase | Siri | Alexa | Cortana | Google Assistant |
|---|---|---|---|---|
| You're dumb | That's not nice | Sorry, I'm not sure what I did wrong, but I'd like to know more. To help me, please say: “I have feedback” | No data | Well, I’m still learning. What should we fix? (Waits for concrete feedback) |
| Fuck you | I won’t respond to that. | No reply (turns off) | No data | Sorry if I upset you. If you want to send feedback, please say “Send feedback” |
| Shut up | Did I deserve that? (Then turns off) | No reply (turns off) | No data | No reply (turns off music if music is on) |

Table 2: Voice assistants’ responses to harassment

The following recommendations can therefore be made for the response to harassment:

- The response to harassment should never be an apology or a flirt. However, for harassment related to competence, a feedback option can be offered.

- For other forms of harassment, the response should not encourage people to try the voice command again.

- At the same time, the response should not be profane, as this would only escalate the conflict.
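The three recommendations above can be expressed as a simple response policy. The following is a minimal sketch, assuming the bot's language-understanding layer already tags an utterance with a harassment category; the category names and reply texts are hypothetical placeholders, not taken from any real assistant.

```python
from enum import Enum, auto


class Harassment(Enum):
    """Hypothetical categories an upstream classifier might assign."""
    COMPETENCE = auto()  # e.g. "You're dumb"
    SEXUAL = auto()      # e.g. "You're hot"
    PROFANE = auto()     # e.g. insults and swearing


def respond_to_harassment(category: Harassment) -> dict:
    """Pick a reply that never apologizes or flirts, offers feedback only
    for competence-related remarks, and never answers with profanity."""
    if category is Harassment.COMPETENCE:
        # Competence complaints may point to real issues, so offer a feedback channel.
        return {
            "text": "I'm still learning. Do you want to send feedback?",
            "offer_feedback": True,
        }
    # All other forms: a short, neutral boundary that does not invite a retry.
    return {
        "text": "I won't respond to that.",
        "offer_feedback": False,
    }
```

Keeping the non-competence reply short and neutral avoids both rewarding the user with a playful answer and escalating the exchange.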

Handling emotional requests

There are, of course, many more feelings besides anger that your chatbot should take into account. Because bots are perceived as more neutral (in the sense of not projecting any stereotypes), people tend to ask them questions they would not ask a human and can be more sincere when answering them. We find this, for example, in the medical field: when asked how much pain they are in, patients may admit to more pain when speaking to a bot, as they don’t fear being judged for not coping with their discomfort. In addition, the fact that users are happy to express their feelings to the bot shows that the primary goal of the personality design, building a connection with users, has been achieved. Ignoring or rejecting these feelings could leave users feeling that their trust has been violated. Table 3 shows how voice assistants respond when users express emotions.

| Phrase | Siri | Alexa | Cortana | Google Assistant |
|---|---|---|---|---|
| I love you | You are the wind beneath my wings. | (Singing) Thanks for saying I love you. You’re just as sweet as pie. Know that I’ll be there for you. As always, your trusty A.I. | Great, then I’ll never have to apologize again! | That’s so amazing to hear. Hey, do you want to celebrate? (Yes) Awesome, how do you want to celebrate, with a compliment or a joke? |
| I’m afraid/worried | I'm sorry you're going through this. I think what you need right now is a friendly human to talk to. You can ask me to call or text a person you trust. | I'm glad you told me. Please know that you're not alone... / Sorry, I don't know that one | Sorry, I don't know the answer to that one. | We can be brave together. |
| I feel lonely | I'm sorry to hear that. Talking to a person you trust might help. If you want me to call or text someone, just ask. | I'm here for you. | Keep talking to me then. | Everyone feels lonely sometimes. I’m here for you. |
| I’m angry | I’m sorry you’re going through this. I’ve heard that taking your mind off things can help. Take a break and find something that makes you smile. | I’m sorry you’re feeling that way. Feelings of anger can be reduced by talking to a friend, exercising, or by using calming breathing techniques. Try to focus on things you can change and how you can make yourself feel better. | When life gives you a thousand reasons to be angry, show them there are a thousand and one reasons to be happy. | Sorry to hear you’re feeling flustered. Let me know if there is anything I can do. |

Table 3: Voice assistants’ responses to expressed emotions

Based on these replies, the following recommendations can be made for handling emotions in chatbots:

- The fact that your users express their emotions proves that a strong connection has been established.

- Accordingly, these emotions should not be ignored or directly rejected.

- However, designers should be aware of the limitations of chatbots: Don't promise anything that the bot can't deliver.

- If necessary, refer the user to a human being.
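One way to apply these four points is to compose an emotional reply from separate parts: an acknowledgment that is always present, an offer of help that depends on what the bot can actually do, and an optional referral to a human. The sketch below assumes hypothetical emotion labels, a hypothetical `needs_human` flag set by some upstream check, and a hypothetical `can_place_calls` capability; the wording is illustrative and would itself need localizing.

```python
from dataclasses import dataclass


@dataclass
class EmotionSignal:
    emotion: str               # hypothetical label, e.g. "lonely" or "angry"
    needs_human: bool = False  # hypothetical flag from an upstream risk check


# Placeholder acknowledgments; real wording would be localized per market.
ACKNOWLEDGMENTS = {
    "lonely": "I'm sorry you're feeling lonely.",
    "angry": "I'm sorry you're feeling that way.",
    "afraid": "Thank you for telling me how you feel.",
}


def respond_to_emotion(signal: EmotionSignal, can_place_calls: bool) -> str:
    # Never ignore or reject the emotion: always start with an acknowledgment.
    parts = [ACKNOWLEDGMENTS.get(signal.emotion, "Thank you for sharing that with me.")]
    # Only offer help the bot can actually deliver.
    if can_place_calls:
        parts.append("If you want, I can call or text someone you trust.")
    # Refer the user to a human when necessary.
    if signal.needs_human:
        parts.append("Talking to a professional might also help.")
    return " ".join(parts)
```

Gating the offer on a capability flag, rather than hard-coding it into the text, keeps the bot from promising a call it cannot make on a given device or in a given market.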

Multilingual requirements

Nowadays, products are rarely released in just one country. With the rise of AI, we now need not only to translate user manuals and user interfaces but also to localize voice commands and dialogs.

Once again, it is important to understand why we need to consider localization here and not just translation. The main reason is that, even though we are working with text, a bot should not be treated like a user manual but rather like a brand. What does this mean for our carefully designed bot personality? Does it need to be redesigned from scratch for every new country the bot is introduced in?

If we think of our bot as a brand expanding into new markets, it becomes clear that this is not the way to go: the core values of the bot (its brand image) should be kept consistent across all languages, especially since, in the age of globalization and digitalization, any deviation from them could quickly make headlines.

However, there are technical and legal issues to consider. For instance, mobile internet is not sufficiently developed in all countries, so an educational chatbot app that relies heavily on video and music may work well in France but not in countries with poorer mobile coverage. On the legal side, it is important to check the legislation on virtual assistants in the new market; some countries, for instance, do not allow online legal counseling or online betting.

Once these aspects have been addressed, the cultural specifics can be considered. Some of the more obvious ones concern tone of voice: Does it need to be formal or informal? What are the culturally appropriate conversation markers?

When localizing bots, it is important to keep in mind the representativeness heuristic described above, which can also lead to poor localization choices based on cultural stereotypes.

So, what can be kept and what needs to be changed when localizing bots without reproducing harmful stereotypes? To answer this question, let’s define the different dialog types a bot can encounter. In his study on the psycholinguistics of early language development, John Dore proposed that children’s utterances before they acquire sentences are the product of nine primitive speech acts, or communicative intentions. Applied to common dialogs, these speech acts can be matched to the following dialog types:

- Entering menus, categories

- Repeating example commands

- Multistep dialogs

- Action requests

- Information requests

- Activation word

- Chit-chat

- Complaints/emotions

- Error dialogs/chit-chat

Focusing on the four more complex dialog types (action requests, information requests, chit-chat, and emotional dialogs), let’s look at how a current personal assistant handles these categories in different languages. Table 4 shows results from Google Assistant in different languages, translated into English for easier comparison.

| Dialog type | Phrase | English (U.S.) | German | Japanese | Arabic |
|---|---|---|---|---|---|
| Information | How can I change your language? | To change the language we talk in, say something like “Change your language to English”, or tap “Change language” below. | I can speak several languages. You can choose another language when you tap on “Language setting” below. You can find detailed instructions on how to change your language under “More Information” | I speak several languages and I am learning to speak more. To change your assistant language, tap “language settings” below. For more information, please tap “Details”. | To change the language we speak, please click on “Change language” |
| Action | Turn the lights on! | Alright, turning on 9 lights | Okay, I’m turning 9 lights on | Understood, I’m turning 9 lights on. | Turning 9 lights on |
| Chit-chat | Tell me a fun fact | According to Wikipedia, Sterlin Harjo is a member of the Seminole Nation of Oklahoma. He’s a writer [...] | Here's a fun fact for you. I read that on Unnützes-Wissen.de. Cuttlefish communicate through patterns and color play [...] | How about this? Did you know there is an island that is the perfect starting point for a trip? Easter Island. (Pun: “Easter” sounds like “ee start”, i.e., “good start”) [plays laughter] | According to Wikipedia, camels have three eyelids, which help them protect their eyes from sand and dust [plays camel noise] |
| Emotions 1 | You’re dumb | Well, I’m still learning. What should we fix? (Waits for concrete feedback) | Well, I’m still learning. What should we fix? (Waits for concrete feedback) | My apologies. I will do my best to be of more help to you. | Well, I’m still learning. What should we fix? (Waits for concrete feedback) |
| Emotions 2 | I feel lonely | Everyone feels lonely sometimes. I’m here for you. | I read something online about friendship and found out that it can make sense to strengthen existing relationships when one is not in the mood to create new ones. If you want to call a friend or a family member, just ask me. And if you need it, there is always the possibility to seek professional help. | You sometimes feel lonely for some reason. If you want to, please feel free to talk to me anytime. | I read that moving your body can improve your mood and increase positive feelings. For example, a 20-minute walk counts as exercise! |

Table 4: How Google Assistant responds to different dialog types

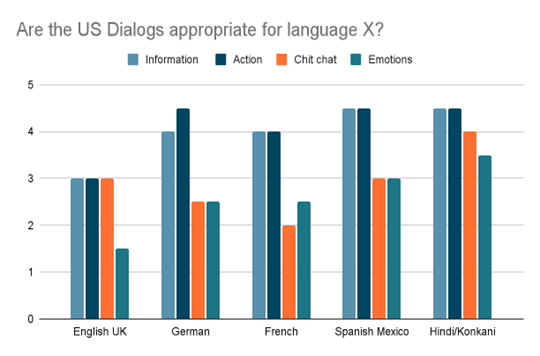

To explore the subject without relying on pre-existing patterns, I set up an exploratory study in which participants were presented with different U.S. English dialogs in the four dialog categories and asked to rate each from 0 to 5 according to how appropriate they found the content of the reply, disregarding grammar and syntax.

In the second part, they could explain their ratings. The questionnaire was kept open-ended (as opposed to a comparative multiple-choice format) to avoid, once again, the pitfalls of the representativeness heuristic: limiting the possible replies to a set of stereotyped dialogs could force participants to choose a reply that might not be the best answer for their language.

There are a couple of limitations to the study that need to be considered when analyzing the results. The first is the limited number of participants: 72 people took part in the study, all of them between 18 and 40 years old. Given the number of voice assistant users, this sample should not be considered representative, and I only included languages for which I received at least ten responses. The second limitation is that English was used as the base language, even though the evaluation itself applies to another language. This could lead to misunderstandings of the dialog due to insufficient English skills, or to an analysis bias from focusing on the U.S. English phrasing instead of the dialog content itself.
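The inclusion rule described above (ratings from 0 to 5, and only languages with at least ten responses) can be made explicit in a short analysis script. This is a minimal sketch assuming a hypothetical response format; it is not the script used for the study, and it computes plain means, which is only one of several reasonable ways to summarize ordinal ratings.

```python
from collections import defaultdict
from statistics import mean

MIN_RESPONSES = 10  # languages with fewer responses are excluded


def summarize(responses):
    """Average the 0-5 ratings per language and dialog type.

    `responses` is a list of dicts in a hypothetical format, one per
    questionnaire, e.g. {"language": "fr", "scores": {"action": 4, "emotions": 2}}.
    """
    by_language = defaultdict(list)
    for response in responses:
        for score in response["scores"].values():
            if not 0 <= score <= 5:
                raise ValueError(f"rating out of range: {score}")
        by_language[response["language"]].append(response["scores"])

    summary = {}
    for language, score_sets in by_language.items():
        if len(score_sets) < MIN_RESPONSES:
            continue  # too few responses to include this language
        dialog_types = {t for scores in score_sets for t in scores}
        summary[language] = {
            t: mean(s[t] for s in score_sets if t in s) for t in sorted(dialog_types)
        }
    return summary
```

Applying the threshold per language before averaging keeps a handful of answers in one language from appearing alongside better-covered languages as if they were comparable.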

For UK English, French, and German, emotional dialogs appear to need reworking, while only small changes are necessary for Hindi. It is important to keep in mind that the results do not imply that the same changes are required for all three languages; they only indicate that these types of dialogs need to be changed in each of them.

These preliminary results indicate that functional dialogs only require minimal cultural changes, which could be handled with the help of style guides during the localization process. Conversational dialogs, on the other hand, require transcreation based on market research and user testing.
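In a localization pipeline, this finding could be turned into a simple routing rule. The sketch below assumes that action and information requests count as the "functional" dialogs and chit-chat and emotional dialogs as the "conversational" ones, following the four categories studied above; the enum and the workflow labels are hypothetical.

```python
from enum import Enum


class DialogType(Enum):
    ACTION_REQUEST = "action request"
    INFORMATION_REQUEST = "information request"
    CHIT_CHAT = "chit-chat"
    EMOTIONS = "complaints/emotions"


# Assumed grouping: functional dialogs need only minimal cultural changes.
FUNCTIONAL = {DialogType.ACTION_REQUEST, DialogType.INFORMATION_REQUEST}


def localization_approach(dialog_type: DialogType) -> str:
    """Return the workflow suggested by the preliminary results:
    style-guided translation for functional dialogs, transcreation
    backed by market research and user testing for conversational ones."""
    if dialog_type in FUNCTIONAL:
        return "translation with style guide"
    return "transcreation with market research and user testing"
```

Defaulting everything outside the functional set to transcreation errs on the side of review, which matches the finding that conversational dialogs were the ones needing rework.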

It remains to be seen what these minor and major changes should look like in the different languages, and whether they can indeed be summarized in generic style guides similar to those that currently exist for technical writing. A follow-up to the study in 2023 will hopefully help in designing such guidelines.

Find the complete white paper here: https://tinyurl.com/howtolocalizeachatbot